Publications

At Seisquare, our mission is to consistently apply stochastic mathematical models to G&G workflows. We translate deterministic G&G processes into stochastic ones, which paves the way to consistent uncertainty quantification and propagation throughout G&G workflows. We believe that this process of uncertainty characterization maximizes the performance of these workflows and the reliability of static/dynamic reservoir models. The end result is confident subsurface predictions, each associated with a reliable +/- uncertainty value, and confident E&P decisions. We’ve been publishing articles in the main industry journals for the past two decades. Have a look at our contributions below and feel free to reach out for more information.

General | Workflow: Reservoir Processing | Workflow: Reservoir Structure | Workflow: Reservoir Properties | Workflow: Reservoir 4D

General

EAGE WORKSHOP JUNE 2024: WHY GEOSTATISTICS CAN BE USEFUL IN SEISMIC PROCESSING AND MODELLING Jean-Luc Mari (Sorbonne Université, Spotlight), Arben Shtuka (Seisquare)

At the feasibility stage of a high-level radioactive waste facility in the eastern Paris Basin, the French National Radioactive Waste Management Agency (Andra) has conducted innovative and extensive characterization of the Callovo-Oxfordian argillaceous rock (Cox) and the neighboring Oxfordian and Dogger limestones. High-resolution 3D seismic data were used to model the distribution of mechanical and hydrogeological properties of the geological formations. Assessing the reliability of the geophysical modelling is crucial for making decisions on the design of the radioactive waste facility. Probabilistic modeling (geostatistics) has been successfully introduced into the geophysical workflow to quantify the uncertainty attached to processing and modeling outputs. The result of such hybrid geophysical-geostatistical processing is increased confidence in seismic interpretation and modeling, and reliable operational risk assessment.

OILFIELD TECHNOLOGY SEPTEMBER 2011: TURNING CHANCE INTO PROFIT Luc Sandjivy (Seisquare)

At a time when oil resources are becoming scarce and increasingly costly to produce, operators are looking for better reservoir production and recovery. Managers must derive E&P strategies that combine profitability and sustainability to make the best of the oil resources available. Looked at more closely, there are two ways to optimize reservoir management: improved technology and less uncertainty. The technological side is well documented and discussed in all oil forums, as it has been the key factor in proving additional reserves over the past decades. The producing life of entire oil basins, such as the North Sea Basin, has been drastically lengthened by technological improvements; does this not call into question the way oil reserves are defined and computed? More information means less uncertainty, but to what extent is this valid, and at what cost? This article explores this uncertainty and shows how properly managing it can lead to profitability and sustainability.

EAGE 2014 : PLEA FOR CONSISTENT UNCERTAINTY MANAGEMENT IN GEOPHYSICAL WORKFLOWS TO BETTER SUPPORT E&P DECISION-MAKING Luc Sandjivy (Seisquare), A. Shtuka (Seisquare), François Merer (Seisquare)

Within its scientific program devoted to the feasibility of a high-level radioactive waste facility in the Callovo-Oxfordian argillaceous rock (Cox) of the eastern Paris Basin, the French National Radioactive Waste Management Agency (Andra) has conducted an extensive characterization of the Cox and of the Oxfordian and Dogger limestones located directly above and below the target formation. We show how high-resolution 3D seismic data have been used to model the distribution of mechanical and hydrogeological properties of the geological formations. Modeling of mechanical parameters such as shear modulus and of hydrogeological parameters such as the permeability index (Ik-Seis) indicates weak variability of the parameters, which confirms the homogeneity of the target formation (Callovo-Oxfordian clay). The procedure has been extended to model the Gamma Ray distribution at the seismic scale. The distributions of mechanical and hydrogeological properties of the geological formations (Callovo-Oxfordian and Dogger) are discussed through cross line XL217 extracted from the Andra 3D block.

Workflow: Reservoir Processing

SEG HOUSTON 2009 : A GENERALIZED PROBABILISTIC APPROACH FOR PROCESSING SEISMIC DATA Arben Shtuka*, Thomas Gronnwald and Florent Piriac, SeisQuare, France

Noise removal, wave separation and deconvolution are important issues in seismic processing. All these processing tasks deal with an observed seismic signal that contains acquisition noise, different kinds of wave fields and multiples, and their goal is to improve the seismic image. Most standard processing techniques are based on the frequency characteristics of the observed signal, and the algorithms are mostly ‘data driven’. What we propose in this paper is a generalized probabilistic approach based on a geostatistical estimator: kriging. Kriging provides a general mathematical model known as the best linear unbiased estimator. Why use kriging instead of standard processing methods? Because kriging provides not only a linear unbiased estimator but also an estimation error, which makes it possible to control the quality of the operator and of its parameter choices. We illustrate this approach with the filtering of seismic gathers, the separation of wave fields on vertical seismic profiles and, finally, an application to deconvolution.
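The "estimate plus estimation error" argument can be made concrete with ordinary kriging along one axis. This is a minimal sketch, not the paper's implementation: the exponential covariance model, its sill and range, the sample values and the function names are illustrative assumptions.

```python
import numpy as np


def exp_cov(h, sill=1.0, a_range=10.0):
    """Exponential covariance C(h) = sill * exp(-3|h| / a_range) (assumed model)."""
    return sill * np.exp(-3.0 * np.abs(h) / a_range)


def ordinary_kriging(x, z, x0, sill=1.0, a_range=10.0):
    """Ordinary kriging at x0 from 1D samples (x, z).

    Returns the estimate, the kriging (estimation) variance and the weights.
    """
    x = np.asarray(x, dtype=float)
    z = np.asarray(z, dtype=float)
    n = len(x)
    # Kriging matrix: data-to-data covariances bordered by the unbiasedness row/column.
    lhs = np.ones((n + 1, n + 1))
    lhs[:n, :n] = exp_cov(x[:, None] - x[None, :], sill, a_range)
    lhs[n, n] = 0.0
    # Right-hand side: data-to-target covariances plus the constraint sum(w) = 1.
    rhs = np.ones(n + 1)
    rhs[:n] = exp_cov(x - x0, sill, a_range)
    sol = np.linalg.solve(lhs, rhs)
    weights, mu = sol[:n], sol[n]
    estimate = weights @ z
    variance = sill - weights @ rhs[:n] - mu
    return estimate, variance, weights


# Estimate a missing sample at position 3 from its neighbours on a trace.
x = [0.0, 1.0, 2.0, 4.0, 5.0]
z = [0.2, 0.5, 0.9, 0.4, 0.1]
est, var, w = ordinary_kriging(x, z, 3.0)
```

The weights sum to one (the unbiasedness constraint), and at a sample location the estimate reproduces the datum with zero variance, since kriging without a nugget is an exact interpolator.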

EAGE ROME 2008 : WHY SHOULD WE USE PROBABILITY MODELS FOR SEISMIC DATA PROCESSING? A Case Study Comparing Wiener and Kriging Filters L. Sandjivy, T. Faucon, T. Gronnwald – SEISQUARE / J-L. Mari – IFP SCHOOL

Why should we use probability models for processing seismic amplitude data? How do spatial filters such as factorial kriging compare to their deterministic counterparts? Such questions are not easy to answer in a way that operational seismic processing geophysicists will readily understand. This paper starts from a very basic shot point processing example that allows a straightforward comparison between standard Wiener amplitude filtering and its geostatistical counterpart, a specific factorial kriging model [3] [4]. A more comprehensive well VSP case is being processed and will also be presented. Comparing both filters from theoretical and practical points of view highlights how they both make use of the same trace autocorrelation function known to geophysicists, but within different conceptual frameworks. It is shown that the geophysical Wiener filters can be reproduced using a factorial kriging probability model, and that this type of modelling opens the way to the quantification of seismic processing uncertainties.
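The Wiener/factorial kriging equivalence can be checked numerically in a simplified setting. The sketch assumes a zero-mean, periodic (circulant) signal autocovariance plus white noise; under that assumption, factorial (simple) kriging of the signal component, s_hat = C_s (C_s + sigma_n^2 I)^-1 z, coincides with the non-causal frequency-domain Wiener filter S(f) / (S(f) + sigma_n^2) built from the same autocorrelation. The exponential autocovariance, the parameter values and the function names are illustrative assumptions, not the paper's model.

```python
import numpy as np


def circulant_signal_cov(n, sill=1.0, a_range=8.0):
    """Periodic exponential autocovariance (assumed) and its circulant matrix."""
    lag = np.arange(n)
    circ_lag = np.minimum(lag, n - lag)              # circular lag distance
    acf = sill * np.exp(-3.0 * circ_lag / a_range)   # trace autocovariance
    idx = np.subtract.outer(np.arange(n), np.arange(n)) % n
    return acf, acf[idx]


def fk_signal(z, cov_s, noise_var):
    """Factorial kriging of the signal component and its kriging variance."""
    n = len(z)
    cov_z = cov_s + noise_var * np.eye(n)            # signal + white-noise (nugget) model
    s_hat = cov_s @ np.linalg.solve(cov_z, z)
    krig_var = np.diag(cov_s - cov_s @ np.linalg.solve(cov_z, cov_s))
    return s_hat, krig_var


def wiener_signal(z, acf, noise_var):
    """Non-causal Wiener filter in the frequency domain: S / (S + N)."""
    spec = np.fft.fft(acf).real                      # signal power spectrum
    gain = spec / (spec + noise_var)
    return np.fft.ifft(gain * np.fft.fft(z)).real


n, noise_var = 64, 0.25
acf, cov_s = circulant_signal_cov(n)
z = np.random.default_rng(0).normal(size=n)
s_fk, krig_var = fk_signal(z, cov_s, noise_var)
s_wiener = wiener_signal(z, acf, noise_var)
```

Both routes give the same filtered trace; the kriging variance is the extra output the deterministic filter does not provide, a sample-by-sample measure of the uncertainty of the filtered result.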

EAGE 2009 : SIGMA PROCESSING OF VSP DATA Thomas Gronnwald – Luc Sandjivy – Robert Della Malva – Arben Shtuka – SEISQUARE Jean Luc Mari – IFP SCHOOL

Having previously compared the factorial kriging filter successfully with its most common geophysical counterparts, a full processing of VSP data using probability modelling (geostatistics) has been developed and named sigma processing of VSP data. A case study is presented in which the separation of upgoing and downgoing waves is performed using a specifically designed kriging filter called the gamma filter. The very promising results stem from the robustness and practical flexibility of the theoretical background of sigma processing of VSP data.

SEG 2010: SIGMA PROCESSING OF PRE-STACK DATA FOR OPTIMIZING AVO ANALYSIS: A CASE STUDY ON THE FORTIES FIELD Florent Piriac, Arben Shtuka*, Romain Gil, Luc Sandjivy, SEISQUARE, France, Klaas Koster, Apache North Sea

Sigma processing of seismic data has already proven its capability to remove noise and separate waves on pre- and post-stack seismic data (Piriac et al. 2010, Gronnwald et al. 2009). To further validate this new technology for assessing the quality and performance of a seismic data processing step, we present the first results of a case study currently underway on the AVO processing of pre-stack data on the Forties field (UK). The preconditioning of pre-stack gathers (AVO noise reduction) requires particular attention so that the amplitude versus offset relationship is not altered before the AVO analysis (AVO consistency). This is verified with the sigma processing workflow presented here, which opens the way to the quality assessment and optimization of the derivation of AVO attributes.

EAGE 2011: GEOSTATISTICAL SEISMIC DATA PROCESSING: EQUIVALENT TO CLASSICAL SEISMIC PROCESSING + UNCERTAINTIES! A. Shtuka* (SeisQuaRe), L. Sandjivy (SeisQuaRe), J.L. Mari (IFP School), J.F.Dutzer (GDF Suez), F. Piriac (SeisQuaRe) & R. Gil (SeisQuaRe)

Geostatistical “filters” using Factorial Kriging are increasingly used to clean geophysical data sets of organized spatial noise that is difficult to remove by standard geophysical filtering. Understanding and handling this kind of spatial processing is not easy for geophysicists, who are neither used to nor trained in stochastic models. In this paper we demonstrate the formal equivalence between Factorial Kriging models and usual geophysical filters (Wiener, (F,k), Median), and the added value of stochastic modelling, namely the quantification of the quality of the filtering process. Case studies on pre-stack gathers and VSP wave separation illustrate the fact that these stochastic techniques are generic and apply to all filtering contexts.

EAGE 2014: CONSISTENT UNCERTAINTY QUANTIFICATION ON SEISMIC AMPLITUDES Luc Sandjivy (Seisquare), A. Shtuka (Seisquare), JL Mari (IFP school), B. Yven (Andra)

Within its mission to design and build an engineered storage facility for high-level radioactive waste in the eastern Paris Basin, the French National Radioactive Waste Management Agency (Andra) is conducting innovative and extensive characterization of the Callovo-Oxfordian claystone formation (Cox) and the surrounding Middle and Upper Jurassic shallow-marine carbonate formations. High-resolution 3D seismic data are used to model the distribution of mechanical and hydrogeological properties of the geological formations (1). Assessing the reliability of the modelling is crucial for making decisions on the design of the radioactive waste facility. We show that first assessing the reliability of the stacked seismic amplitudes that are input to the geophysical inversion is the cornerstone of the whole model reliability assessment process. Stochastic processing of the pre-stack amplitude gathers makes it possible to compute Stochastic Quality Indexes (SQI) attached to the stacked amplitude data. The computation and distribution of the SQI results and their contribution to the mechanical and hydrogeological model are discussed.

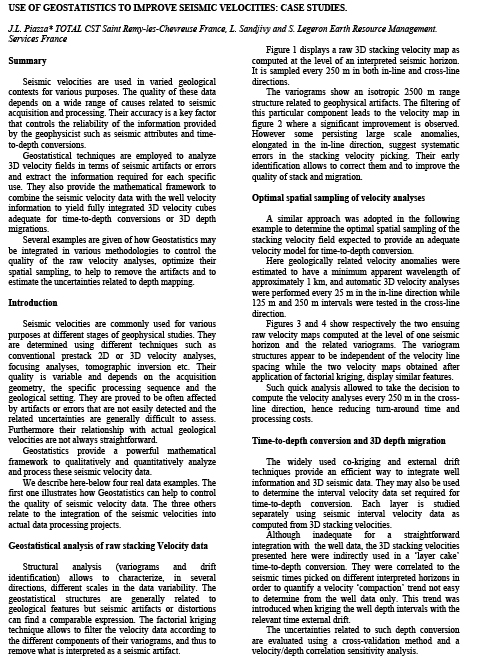

SEG 1997: USE OF GEOSTATISTICS TO IMPROVE SEISMIC VELOCITIES: CASE STUDIES. J.L. PIAZZA* TOTAL CST SAINT REMY-LES-CHEVREUSE FRANCE, L. Sandjivy and S. Legeron ERM.S France

Seismic velocities are used in varied geological contexts for various purposes. The quality of these data depends on a wide range of causes related to seismic acquisition and processing. Their accuracy is a key factor that controls the reliability of the information provided by the geophysicist, such as seismic attributes and time-to-depth conversions. Geostatistical techniques are employed to analyze 3D velocity fields in terms of seismic artifacts or errors and to extract the information required for each specific use. They also provide the mathematical framework to combine seismic velocity data with well velocity information to yield fully integrated 3D velocity cubes suitable for time-to-depth conversions or 3D depth migrations. Several examples show how geostatistics may be integrated into various methodologies to control the quality of the raw velocity analyses, optimize their spatial sampling, help remove artifacts and estimate the uncertainties related to depth mapping.

EAGE 2000 GLASGOW : QUALITY CHECK OF AUTOMATIC VELOCITY PICKING D. Le Meur (Compagnie Générale de Géophysique, Massy, France); C. Magneron (Earth Resource Management Services, France).

Over the last few years, fast methods for estimating dense stacking velocities from seismic data have been developed. These automated picking methods improve the lateral resolution of stack images but are contaminated by mis-picks and artefacts. In this paper, geostatistical techniques are used to analyse the automatic velocity picking and to perform a spatial quality control of the automatic picks. An example of such geostatistical quality control is given.

EAGE 2003 : SPATIAL QUALITY CONTROL OF SEISMIC STACKING VELOCITIES USING GEOSTATISTICS C. MAGNERON, A. LERON AND L. SANDJIVY ERM.S, 16 rue du Château, 77300 Fontainebleau, France

The supervision of stacking velocity data sets, whether hand-picked or automatically picked, is crucial before entering the stack process itself or any other pre-stack or post-stack processing, such as migration or depth conversion. Apart from the internal geophysical quality control of the velocity picks, controlling the spatial consistency of the data set is an additional useful tool for validating the data. Geostatistics provides a quick and operational way of assessing the spatial quality of stacking velocity data sets through the computation of a Spatial Quality Index (SQI®) attached to each velocity pick: high SQI values highlight data that are not spatially consistent with their neighbours. These are easily localised and submitted to the geophysicist in charge of supervision for interpretation and possible update of the velocity analysis. The case study illustrated below was conducted within the scope of a joint research project with operators and contractors in order to validate spatial quality control using geostatistics. The SQI results were validated by the geophysicist after a conclusive blind test and stack control. Spatial quality control of seismic velocity data sets is now operational and has already been run on more than 200 data sets. An equivalent spatial quality control of 4D seismic amplitude cubes is now being developed on the same methodological basis.
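The SQI® definition itself is not given on this page. As a hedged illustration of the idea that high values flag picks inconsistent with their neighbours, the sketch below uses a standardized leave-one-out kriging error as a stand-in index. The covariance model, its parameters, the synthetic velocity line and the function names are all assumptions.

```python
import numpy as np


def exp_cov(h, sill, a_range):
    """Exponential covariance model (assumed)."""
    return sill * np.exp(-3.0 * np.abs(h) / a_range)


def ok_estimate(x, z, x0, sill, a_range):
    """Ordinary kriging estimate and variance at x0."""
    n = len(x)
    lhs = np.ones((n + 1, n + 1))
    lhs[:n, :n] = exp_cov(x[:, None] - x[None, :], sill, a_range)
    lhs[n, n] = 0.0
    rhs = np.ones(n + 1)
    rhs[:n] = exp_cov(x - x0, sill, a_range)
    sol = np.linalg.solve(lhs, rhs)
    w, mu = sol[:n], sol[n]
    return w @ z, sill - w @ rhs[:n] - mu


def quality_index(x, z, sill, a_range):
    """Hypothetical SQI-like index: |leave-one-out error| / kriging std."""
    x = np.asarray(x, dtype=float)
    z = np.asarray(z, dtype=float)
    index = np.empty(len(x))
    for i in range(len(x)):
        keep = np.arange(len(x)) != i                 # remove pick i ...
        est, var = ok_estimate(x[keep], z[keep], x[i], sill, a_range)
        index[i] = abs(z[i] - est) / np.sqrt(var)     # ... and re-estimate it
    return index


# Synthetic stacking-velocity line (m/s) with one mis-pick at CMP index 7.
cmp_x = np.arange(0.0, 2000.0, 100.0)
vel = 2000.0 + 0.3 * cmp_x + 20.0 * np.sin(cmp_x / 300.0)
vel[7] += 300.0
sqi = quality_index(cmp_x, vel, sill=1.0e4, a_range=600.0)
```

Under these assumptions the injected mis-pick gets the largest index; its immediate neighbours are also raised, because their leave-one-out estimates use the bad pick.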

SBGF 2003: IMPROVING TIME MIGRATION VELOCITY FIELDS USING GEOSTATISTICS L. Sandjivy, A. Léron, O. Torres ERM.S, France, B. Fathi Norsk Agip, Norway

Controlling the spatial consistency of the velocity fields input to the time migration process is crucial, as it directly impacts the resulting migrated amplitude data. Small local velocity inconsistencies in the vicinity of a newly planned well location may result in unexpected misfits between well records and seismic interpretation. Migration velocity fields are often derived from existing stacking velocity fields. A smoothing factor is applied to the raw stacking velocity data to ensure the continuity of the migration velocity field required by the migration process. It is therefore advisable to control the spatial quality of the velocity field input to the migration process reliably. Geostatistics provides a quick and operational way of assessing the spatial quality of migration velocity fields, and then of improving their derivation by using the relevant spatial filter:

- The computation of a Spatial Quality Index (SQI) attached to each stacking velocity pick helps locate areas with spatial consistency problems before the migration velocity field is derived.

- The use of a geostatistical filter with parameters specified from the stacking data set (kriging weights) optimizes the spatial continuity of the derived migration velocity field.

EAGE 2010: SIGMA PROCESSING OF PSDM DATA – A CASE STUDY F. Piriac (SeisQuaRe), L. Sandjivy* (SeisQuaRe), A. Shtuka (SeisQuaRe), J.L. Mari (IFP) & C. Haquet (Shell)

Stochastic modelling and processing (sigma processing) has been applied to a real PSDM data set (pre- and post-stack) in order to evaluate its contribution to better imaging and AVO processing. Sigma processing may be considered as a step in a conventional processing sequence. It can be applied to CMP gathers after NMO correction or to pre-stack migration common image gathers (CIG). It consists of modelling the experimental spatial autocorrelation function of the data into its “signal” and “noise” components and then looking for the best estimate of the “signal” using the factorial kriging estimator. The technology breakthrough is its ability to:

- Easily assess the spatial content of a data set and check the underlying assumptions of the geophysical processing, such as stationarity (no trend), flattening and absence of trace-to-trace correlation of the noise.

- Optimize the signal-to-noise ratio within a consistent theoretical framework (kriging) and quantify the quality of the result (kriging variance).

When applying it to PSDM data in a real case study, the first objective was to become familiar with this stochastic way of processing amplitude data and to check the consistency of the results with more standard geophysical processes.
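The first step of sigma processing, reading signal and noise components off the experimental spatial autocorrelation, can be illustrated with a trace-to-trace variogram. The sketch assumes a synthetic NMO-corrected gather in which every trace carries the same flat events plus uncorrelated noise; in that case the experimental variogram across traces stays flat at the noise variance, which is what the flattening and uncorrelated-noise assumptions predict. The synthetic data, the signal-to-noise definition and the function name are hypothetical.

```python
import numpy as np


def trace_variogram(gather, max_lag):
    """Experimental variogram across traces: 0.5 * mean((z(t, x+h) - z(t, x))^2)."""
    gamma = []
    for h in range(1, max_lag + 1):
        diff = gather[:, h:] - gather[:, :-h]
        gamma.append(0.5 * np.mean(diff ** 2))
    return np.array(gamma)


rng = np.random.default_rng(1)
n_samples, n_traces, noise_std = 500, 24, 0.1
t = np.arange(n_samples)
# Flat events: the same "signal" trace repeated on every offset.
signal = np.sin(2 * np.pi * t / 40.0) * np.exp(-t / 300.0)
gather = signal[:, None] + noise_std * rng.normal(size=(n_samples, n_traces))

gamma = trace_variogram(gather, max_lag=5)
noise_var = gamma.mean()                    # flat variogram level = noise variance
total_var = gather.var()
snr = (total_var - noise_var) / noise_var   # signal-to-noise variance ratio
```

If the events were not properly flattened, the signal would no longer cancel in the trace differences and the variogram would rise with lag, which is how the check exposes a violated assumption.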

EAGE 2004: THE RESERVOIR QUALIFICATION OF SEISMIC DATA USING GEOSTATISTICS AUTHORS : LUC SANDJIVY, ALAIN LERON AND SARAH BOUDON (Seisquare) Earth Resource Management SERVICES 16 rue du château 77300 Fontainebleau France

Major new breakthroughs in geophysical acquisition and processing aim at providing reservoir engineers with detailed and reliable data that contribute to reservoir modeling and monitoring. Although operators and contractors take great care over the quality of geophysical data, from the acquisition scheme to the processing flow-chart, using geophysics for a reservoir issue is still a challenge due to the intrinsic limitations of seismic data compared with reservoir static and dynamic properties. Most of the time, the theoretical reservoir signature expected to be highlighted and quantified from seismic attributes is so weak that, in practice, it is mixed with all kinds of geophysical artifacts or is not fully reachable within the domain of validity of the underlying operational geophysical hypotheses. Any attempt to quantify a reservoir character using seismic therefore faces a lot of uncertainty, which lowers its reliability and delays its acceptance by reservoir engineers. The ability to handle these uncertainties properly throughout the operator workflow has become one of the key success factors for reservoir geophysics. This is what we mean by reservoir qualification of seismic data.

Workflow: Reservoir Structure

ISMM 2005: MORPHOLOGICAL SEGMENTATION APPLIED TO 3D SEISMIC DATA Timothée Faucon (1,2), Etienne Decencière (1), Cédric Magneron (2); (1) Centre de Morphologie Mathématique, Ecole des Mines de Paris, 35 rue Saint Honoré, 77305 Fontainebleau, France; (2) Earth Resource Management Services, 16 rue du Château, 77300 Fontainebleau, France

Mathematical morphology tools have already been applied to a wide range of application domains, from 2D grey-level image processing to colour movies and 3D medical image processing. However, they seem to have seldom been used to process 3D seismic images. The specific layer structure of these data makes them very interesting to study. This paper presents the first results we obtained by carrying out two kinds of hierarchical segmentation tests on 3D seismic data. First, we performed a marker-based segmentation of a seismic amplitude cube constrained by a picked surface called a seismic horizon. The second test consisted of applying a hierarchical segmentation to the same seismic amplitude cube, but this time with no a priori information about the image structure.

EAGE 2006: DIP STEERED FACTORIAL KRIGING L. Sandjivy, C. Magneron (Seisquare, France)

Since the 1990s, geostatistics has been increasingly and more effectively integrated into the earth model building process [1]. Geostatistics provides a suitable language and dedicated tools to analyse the spatial coherency of spatial data sets (mono-variate or multi-variate) and therefore leads to more robust interpolations, spatial filters and simulations. More precisely, the factorial kriging (FK) model, a geostatistical filtering technique developed in 1982 by Matheron [2], has been successfully used in seismic processing [3], [4], [5], [6], [7]. Nevertheless, this technique appears to be limited by structural dipping effects when applied to seismic amplitude cubes or related attribute cubes.

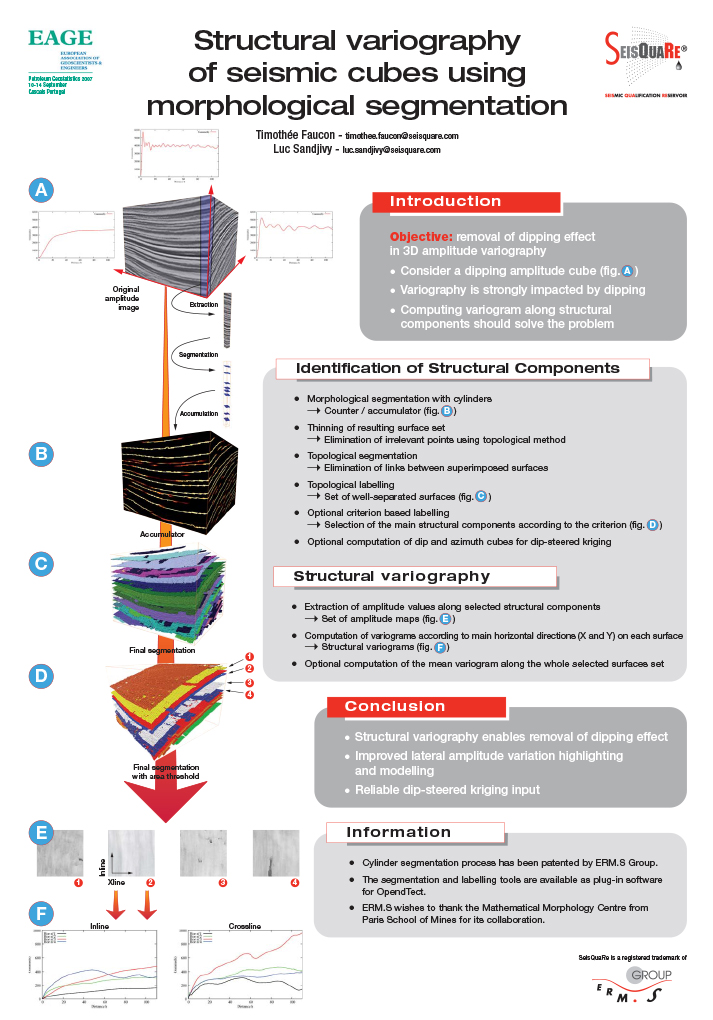

EAGE PETROLEUM GEOSTATISTICS 2007 CASCAIS PORTUGAL : STRUCTURAL VARIOGRAPHY OF SEISMIC CUBES USING MORPHOLOGICAL SEGMENTATION AUTHORS : TIMOTHEE FAUCON, LUC SANDJIVY, (ERM.S 30 av Gal de GAULLE 77210 Fontainebleau AVON France)

Objective: removal of the dipping effect in 3D amplitude variography.

- Consider a dipping amplitude cube: variography is strongly impacted by dipping.

- Computing the variogram along structural components should solve the problem.

- Structural variography enables removal of the dipping effect.

- Lateral amplitude variations are better highlighted and modelled.

- The result is a reliable input to dip-steered kriging.
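A minimal 2D sketch of structural variography, assuming a constant, known dip in samples per trace; a real cube would need a locally estimated dip field, which is where the poster's morphological segmentation comes in. The synthetic section and the function name are hypothetical.

```python
import numpy as np


def lateral_variogram(section, lag, dip=0):
    """Variogram at trace lag `lag`, comparing z(t, x) with z(t + dip*lag, x + lag).

    section: array (n_samples, n_traces); dip in samples per trace.
    dip=0 gives the usual horizontal variogram.
    """
    nt, nx = section.shape
    shift = dip * lag
    if shift >= 0:
        a = section[: nt - shift, : nx - lag]
        b = section[shift:, lag:]
    else:
        a = section[-shift:, : nx - lag]
        b = section[: nt + shift, lag:]
    return 0.5 * np.mean((b - a) ** 2)


# Dipping layered section: events go down one sample per trace.
t = np.arange(200)[:, None]
x = np.arange(40)[None, :]
section = np.sin(2 * np.pi * (t - x) / 20.0)

gamma_horizontal = lateral_variogram(section, lag=5)          # polluted by dip
gamma_structural = lateral_variogram(section, lag=5, dip=1)   # follows the layers
```

Along the layers the variogram of this noise-free section vanishes, while the horizontal variogram picks up large apparent variability that is purely an effect of dip.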

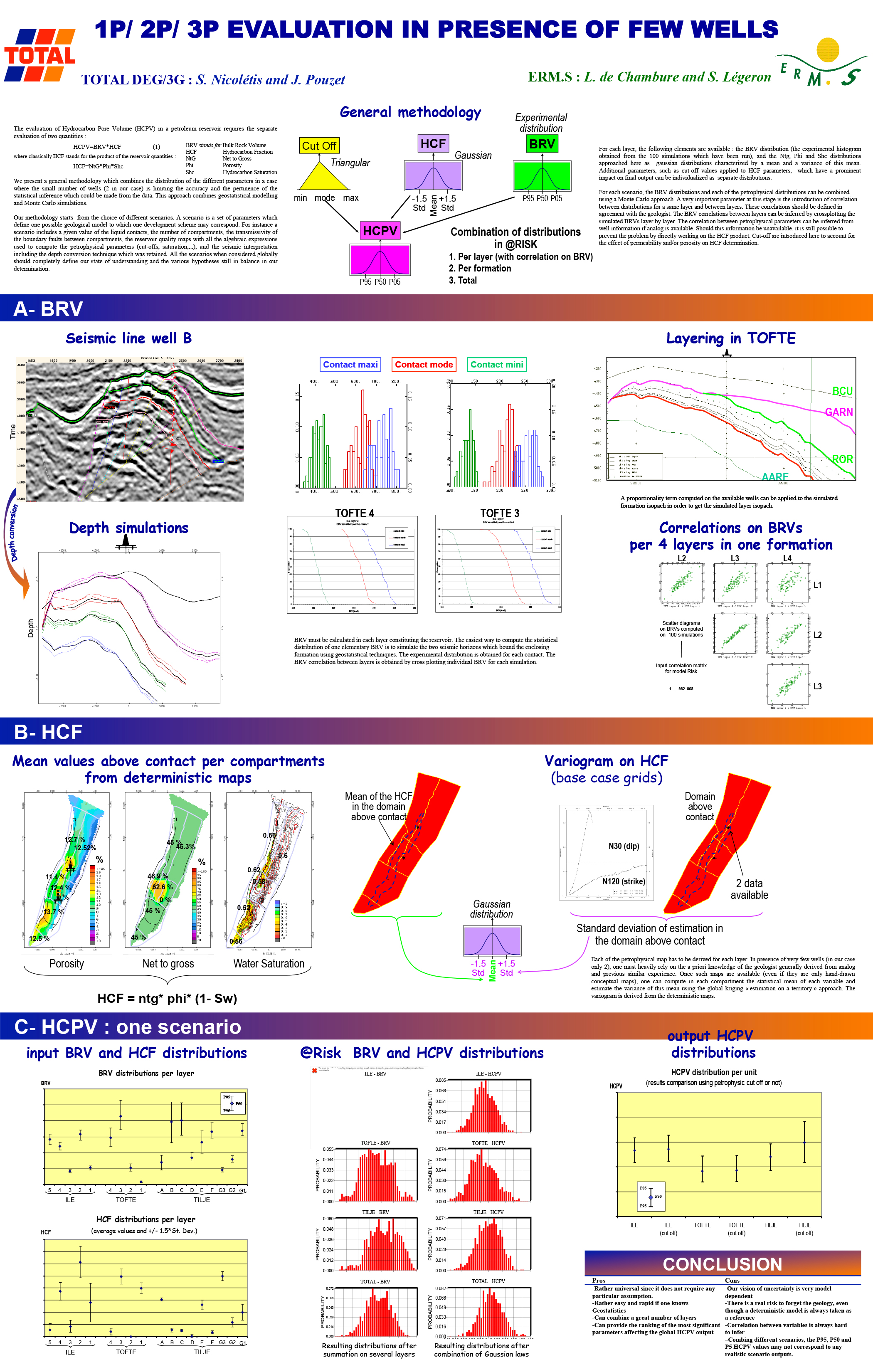

EAGE&SPG 1999 TOULOUSE: 1P/2P/3P EVALUATION IN PRESENCE OF FEW WELLS S. Nicolétis and J. Pouzet (TOTAL DEG/3G), L. de Chambure and S. Légeron (Seisquare)

The evaluation of the Hydrocarbon Pore Volume (HCPV) in a petroleum reservoir requires the separate evaluation of two quantities, the bulk rock volume (BRV) and HCF:

HCPV = BRV × HCF (1)

where HCF classically stands for the product of the reservoir quantities, HCF = NtG × Phi × Shc. We present a general methodology that combines the distributions of the different parameters in a case where the small number of wells (2 in our case) limits the accuracy and relevance of the statistical inference that could be made from the data. This approach combines geostatistical modelling and Monte Carlo simulations. Our methodology starts from the choice of different scenarios. A scenario is a set of parameters that defines one possible geological model, to which one development scheme may correspond. For instance, a scenario includes a given value of the liquid contacts, the number of compartments, the transmissivity of the boundary faults between compartments, the reservoir quality maps with all the algebraic expressions used to compute the petrophysical parameters (cut-offs, saturation,…), and the seismic interpretation, including the depth conversion technique that was retained. Taken together, the scenarios should completely define our state of understanding and the various hypotheses still open.
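The Monte Carlo combination behind HCPV = BRV × NtG × Phi × Shc can be sketched in a few lines. This assumes, for a single hypothetical scenario, independent triangular input distributions with made-up ranges; the methodology described above derives its inputs from geostatistical maps and scenario choices instead. The 1P/2P/3P labels follow the usual probabilistic convention (P90/P50/P10, i.e. exceeded with 90%, 50% and 10% probability).

```python
import numpy as np


def hcpv_percentiles(n_draws=100_000, seed=0):
    """Monte Carlo HCPV = BRV * NtG * Phi * Shc with assumed independent inputs."""
    rng = np.random.default_rng(seed)
    # Hypothetical triangular(min, mode, max) ranges for a single scenario.
    brv = rng.triangular(80e6, 100e6, 130e6, n_draws)   # bulk rock volume, m3
    ntg = rng.triangular(0.50, 0.65, 0.80, n_draws)     # net-to-gross
    phi = rng.triangular(0.15, 0.20, 0.25, n_draws)     # porosity
    shc = rng.triangular(0.60, 0.70, 0.80, n_draws)     # hydrocarbon saturation
    hcf = ntg * phi * shc
    hcpv = brv * hcf
    # P90 is exceeded with 90% probability, i.e. it is the 10th percentile.
    p90, p50, p10 = np.percentile(hcpv, [10, 50, 90])
    return {"1P (P90)": p90, "2P (P50)": p50, "3P (P10)": p10}


result = hcpv_percentiles()
```

Each scenario would get its own set of input distributions and therefore its own 1P/2P/3P triplet; the sketch covers only one.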

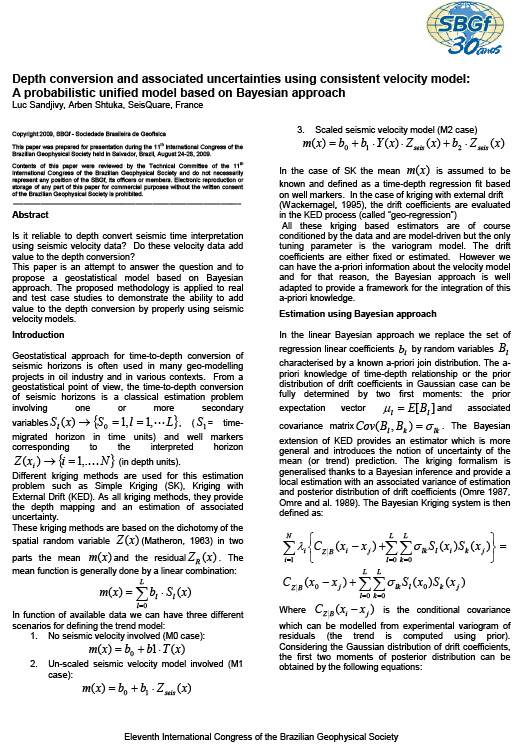

SBGF 2009: DEPTH CONVERSION AND ASSOCIATED UNCERTAINTIES USING CONSISTENT VELOCITY MODEL: A PROBABILISTIC UNIFIED MODEL BASED ON BAYESIAN APPROACH Luc Sandjivy, Arben Shtuka, SeisQuare, France

Is it reliable to depth convert seismic time interpretations using seismic velocity data? Do these velocity data add value to the depth conversion? This paper attempts to answer these questions and proposes a geostatistical model based on a Bayesian approach. The proposed methodology is applied to real and test case studies to demonstrate that properly using seismic velocity models adds value to the depth conversion.
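A one-parameter illustration of Bayesian updating, not the paper's model: treat the average velocity v in z = v·t/2 as uncertain, take a Gaussian prior from the seismic velocity data, and update it with well depths. The single-layer model, the Gaussian assumptions, the numbers and the function names are all hypothetical.

```python
import math


def posterior_velocity(prior_mean, prior_std, twt, depths, depth_std):
    """Conjugate Gaussian update of an average velocity v in z = v * t / 2.

    prior_mean, prior_std: prior from seismic velocities (m/s)
    twt: two-way times at wells (s); depths: well depths (m)
    depth_std: assumed depth measurement error (m)
    """
    precision = 1.0 / prior_std**2
    weighted = prior_mean / prior_std**2
    for t, z in zip(twt, depths):
        g = t / 2.0                          # dz/dv for this well
        precision += g * g / depth_std**2
        weighted += g * z / depth_std**2
    post_mean = weighted / precision
    post_std = 1.0 / math.sqrt(precision)
    return post_mean, post_std


def predict_depth(t, v_mean, v_std):
    """Depth at two-way time t and the part of its std due to velocity uncertainty."""
    return v_mean * t / 2.0, v_std * t / 2.0


v_mean, v_std = posterior_velocity(2200.0, 200.0, [1.0, 1.5, 2.0],
                                   [1000.0, 1500.0, 2000.0], 10.0)
z_pred, z_std = predict_depth(2.5, v_mean, v_std)
```

With accurate well depths the posterior moves from the seismic prior (2200 m/s) to almost exactly the well-implied 2000 m/s, and its standard deviation shrinks far below the prior's 200 m/s; with poor well data the seismic prior would dominate instead.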

SYMPOSIUM MONTPELLIER 2012: CLAYS IN NATURAL AND ENGINEERED BARRIERS FOR RADIOACTIVE WASTE CONFINEMENT DEPTH GEOLOGICAL MODEL BUILDING: APPLICATION TO THE 3D HIGH RESOLUTION “ANDRA” SEISMIC BLOCK J.L. Mari 1, B. Yven 2 1. IFP Énergies nouvelles, IFP school, 92852, Rueil Malmaison Cedex, France. 2. Andra, Centre de Meuse/Haute Marne, 55290, Bure, France

3D seismic blocks and logging data, mainly acoustic and density logs, are often used to build geological models in time. The geological model must then be converted from time to depth. A geostatistical approach to the time-to-depth conversion of seismic horizons is used in many geomodelling projects. From a geostatistical point of view, the time-to-depth conversion of seismic horizons is a classical estimation problem involving one or more secondary variables. The Bayesian approach [1] provides an excellent estimator, more general than traditional kriging with external drift(s), that is well suited to the time-to-depth conversion of seismic horizons. The time-to-depth conversion of the selected seismic horizons is used to compute a time-to-depth conversion model at the time sampling rate (1 ms). The 3D depth conversion model allows the computation of an interval velocity block, which is compared with the acoustic impedance block to estimate a density block as a QC. Unrealistic density values are edited, and the interval velocity block as well as the depth conversion model are updated.
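The density QC step is simple arithmetic: interval velocity from consecutive (two-way time, depth) pairs, Vint = 2·Δz/Δt, then density = AI / Vint. The sketch below assumes a plausible density window of 1800 to 3000 kg/m3 and uses made-up values; the function names are hypothetical.

```python
import numpy as np


def interval_velocity(twt_s, depth_m):
    """Interval velocity between consecutive (two-way time, depth) pairs: 2*dz/dt."""
    return 2.0 * np.diff(depth_m) / np.diff(twt_s)


def density_qc(acoustic_impedance, v_int, rho_min=1800.0, rho_max=3000.0):
    """Density = AI / Vint, with a flag for values outside an assumed plausible range."""
    rho = np.asarray(acoustic_impedance, dtype=float) / v_int
    suspicious = (rho < rho_min) | (rho > rho_max)
    return rho, suspicious


twt = np.array([0.0, 1.0, 2.0, 2.1])                # s, two-way time
depth = np.array([0.0, 1000.0, 2250.0, 2300.0])     # m, from the depth conversion model
v_int = interval_velocity(twt, depth)               # m/s
ai = np.array([4.4e6, 6.0e6, 6.0e6])                # kg/(m2 s), from the impedance block
rho, flag = density_qc(ai, v_int)                   # kg/m3
```

The last interval is deliberately inconsistent (50 m over 100 ms gives 1000 m/s), which yields a density near 6000 kg/m3 and is flagged, mimicking the step where unrealistic density values trigger an update of the depth conversion model.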

Workflow: Reservoir Properties

AAPG HEDBERG SYMPOSIUM “APPLIED RESERVOIR CHARACTERIZATION USING GEOSTATISTICS” DECEMBER 3-6, 2000, THE WOODLANDS TEXAS SEISMIC CONTRIBUTION TO SAND BODY TARGETING. A CASE HISTORY FROM THE BONGKOT FIELD (THAILAND). By Jean Luc PIAZZA (TotalFinaElf), Sarah BOUDON and Luc SANDJIVY (ERM.S*). *ERM.S : Earth Resource Management SERVICES (Fontainebleau – France)

To what extent do the seismic data actually contribute to reducing the uncertainties attached to reservoir characterization? This is the question we tried to answer after developing a geostatistical model for targeting sand bodies inside a gas-producing field. This modeling step was called “From a seismic litho-cube to an operational reservoir conditioning”: a 3D co-simulation of the sand content (sand proportion) defined at the well sites was performed using a seismic attribute derived from a seismic litho-cube (see Ref. 1). From a geostatistical point of view, the simulation process was motivated by the fact that the sand body definition depended on a cut-off parameter applied to the sand content of a grid cell. We suggested at the time that the simulated 3D cubes could prove useful for assisting geologists when planning new producing wells and computing net sand volumes.
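Why a cut-off favours simulation over a single estimated map can be shown on a toy example. The sketch assumes a handful of already simulated sand-proportion realizations (the co-simulation itself is not reproduced), applies the cut-off to each one, and compares the result with applying it to the average map; the arrays, cut-off, cell volume and function name are hypothetical.

```python
import numpy as np


def sand_body_summary(realizations, cutoff, cell_volume):
    """Apply a sand-proportion cut-off to each simulated realization.

    realizations: array (n_realizations, n_cells) of simulated sand proportions
    Returns the per-cell probability of belonging to a sand body and the
    net sand volume of each realization.
    """
    real = np.asarray(realizations, dtype=float)
    is_sand_body = real >= cutoff
    prob_sand_body = is_sand_body.mean(axis=0)
    net_sand = (real * is_sand_body).sum(axis=1) * cell_volume
    return prob_sand_body, net_sand


sims = np.array([[0.2, 0.7, 0.5],
                 [0.4, 0.9, 0.3]])
prob, net = sand_body_summary(sims, cutoff=0.45, cell_volume=1000.0)
# Cut-off applied to the average map instead of to each realization:
_, net_of_mean = sand_body_summary(sims.mean(axis=0, keepdims=True), 0.45, 1000.0)
```

Because the cut-off is non-linear, the average map gives a net sand volume of 800 while the realizations average 1050, and only the realizations provide a per-cell probability of belonging to a sand body.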

SBGF 2009: EXPANDING THE LIMITS OF FACTORIAL KRIGING APPLICATIONS Carlos Eduardo Abreu, PETROBRAS Catherine Formento, SeisQuaRe

Although great care is usually taken during acquisition and processing over the quality of the final seismic data volumes, reservoir geophysicists still face difficulties related to remaining noise and irreducible indetermination, as observed when calibrating seismic to well data or interpreting seismic attributes for reservoir characterization purposes. Geostatistics offers alternative methods for analysing seismic information, using a probabilistic approach based on the analysis of the spatial variability of the data. In this paper, the Factorial Kriging (FK) technique is presented and applied to three different aspects of the seismic reservoir characterization workflow: (i) analysis and decomposition of geometrical attributes to improve fracture mapping; (ii) seismic noise characterization to generate more realistic petro-elastic models in time-lapse feasibility schemes; and (iii) improved time-lapse interpretation strategies by FK decomposition. This filtering technique is well suited to spatial analysis, and the examples show that it greatly improves seismic data quality.
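As a hedged sketch of what FK decomposition means, here is a 1D attribute profile split into a short-range component, a long-range component and noise. It assumes a nested model of two exponential structures plus a nugget with known sills and ranges, and simple kriging around the sample mean; in practice the model comes from fitting the experimental variogram. The model, the synthetic profile and the function names are hypothetical.

```python
import numpy as np


def exp_cov(h, sill, a_range):
    """Exponential covariance structure (assumed)."""
    return sill * np.exp(-3.0 * np.abs(h) / a_range)


def fk_decompose(x, z, short_struct, long_struct, nugget):
    """Simple-kriging factorial decomposition of z into nested components.

    short_struct, long_struct: (sill, range) of two exponential structures
    nugget: variance of the uncorrelated (noise) component
    Returns component estimates that sum to z - mean(z).
    """
    x = np.asarray(x, dtype=float)
    z = np.asarray(z, dtype=float)
    h = x[:, None] - x[None, :]
    c_short = exp_cov(h, *short_struct)
    c_long = exp_cov(h, *long_struct)
    c_nug = nugget * np.eye(len(x))
    resid = z - z.mean()                  # mean treated as known
    y = np.linalg.solve(c_short + c_long + c_nug, resid)
    # Each component is estimated with its own covariance on the right-hand side.
    return {"short": c_short @ y, "long": c_long @ y, "noise": c_nug @ y}


x = np.arange(100.0)
rng = np.random.default_rng(2)
z = np.sin(x / 15.0) + 0.3 * np.sin(x / 2.0) + 0.1 * rng.normal(size=x.size)
parts = fk_decompose(x, z, short_struct=(0.05, 5.0), long_struct=(0.5, 60.0),
                     nugget=0.01)
```

The components add back exactly to the centred data, so each one can be mapped or interpreted separately without losing information.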

SBGF 2011: LOCAL STATIONARY MODELING FOR RESERVOIR CHARACTERIZATION Shtuka Arben, Piriac Florent and Sandjivy Luc (SEISQUARE, France)

When dealing with reservoir characterization using seismic, whatever the algorithm used to invert the seismic data into reservoir properties, one has to deal with the fact that reservoir properties vary in space in a way that is controlled by the structural features of the reservoir. There are basically two ways to deal with this kind of non-stationarity of the reservoir properties:

- steer the inversion algorithm with a number of moving parameters that take the structural features into account at a local scale;

- work on the coordinate system to handle the structural non-stationarity.

The paper shows that only a structural anamorphosis of the coordinate system opens the way to a correct implementation of local stationary models. In other words, steering algorithms with moving parameters creates artifacts as soon as the ranges of reservoir property variations become larger than the structural ranges.

An example taken from actual seismic attribute processing illustrates the case.
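One simple form of structural anamorphosis, shown here only as an assumption (the abstract does not specify the transform used), is a proportional stratigraphic coordinate: each depth or time between a top and a base horizon is mapped to its relative position within the layer, so that points on the same stratigraphic surface share the same coordinate whatever the local thickness. A stationary model can then be fitted in (x, y, u) rather than (x, y, z) and the results mapped back. The function names are hypothetical.

```python
import numpy as np

def to_stratigraphic(z, top, base):
    """Proportional coordinate u = (z - top) / (base - top): 0 on the top horizon, 1 on the base.

    z, top and base are depths (or times) that broadcast together,
    e.g. horizon grids of shape (ny, nx).
    """
    z, top, base = (np.asarray(a, dtype=float) for a in (z, top, base))
    thickness = base - top
    if np.any(thickness <= 0):
        raise ValueError("base horizon must lie below top horizon everywhere")
    return (z - top) / thickness

def from_stratigraphic(u, top, base):
    """Inverse transform from the stratigraphic coordinate back to depth (or time)."""
    u, top, base = (np.asarray(a, dtype=float) for a in (u, top, base))
    return top + u * (base - top)

# Two columns with different layer thicknesses: the mid-layer points both map to u = 0.5
top = np.array([1000.0, 1200.0])
base = np.array([1100.0, 1400.0])
z = np.array([1050.0, 1300.0])
u = to_stratigraphic(z, top, base)
```

In the transformed space, a variogram range measured along u follows the layering instead of cutting across it, which is the property a local stationary model needs.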

AAPG 2005: DEEPWATER SEABED CHARACTERISATION USING GEOSTATISTICAL ANALYSIS OF HIGH DENSITY / HIGH RESOLUTION VELOCITY FIELD Eric Cauquil (1), Vincent Curinier (2), Stéphanie Legeron-Cherif (3), Florent Piriac (3), Alain Leron (3) and Nabil Sultan (4). (1) DGEP/TDO/TEC/GEO, TOTAL, 24 cours Michelet, Paris La Défense, 92069, France, phone: 33 1 41 35 88 58, eric.cauquil@total.com; (2) TOTAL, Paris; (3) ERMS; (4) IFREMER

Deepwater geohazards present geophysical signatures that include interval velocity anomalies: high velocity for gas hydrates and hardgrounds, low velocity for gas-bearing sediments. This paper describes a methodology for regional geohazard assessment using geostatistical analysis of a high density / high resolution (HDHR) velocity field. A 3D HDHR velocity field is obtained using Total’s internal velocity picking software (DeltaStack3D), which picks velocities automatically at every CDP gather. Time sampling, which depends on the seismic frequency content, is driven by constraints defined along a seed line and propagated in 3D to account for lateral geological variations. The interval velocity cube is then computed from this RMS velocity field. On this interval velocity field, ERMS applied a standard Spatial Quality Assessment (SQA) procedure using geostatistics: spatial analysis of the velocity data, estimation (factorial kriging) of the “possible artefact” and “geological” spatial components, and derivation of a Spatial Quality Index (SQI, patented by ERM.S). The final products are interval velocity charts in which the filtered residuals can reveal small-scale geological features. The velocity anomaly charts were compared with in situ geotechnical measurements conducted by IFREMER with PENFELD (deep-water CPT). A total of 21 CPT and sonic measurements were acquired over the study area, allowing detection of gas, gas hydrate layers and carbonate concretions. The comparison between the HDHR velocity field and the in situ measurements showed a very good correlation. This geostatistical analysis of an HDHR velocity field calibrated on in situ measurements provides a relevant methodology for regional deep-water seabed characterisation.
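The step from RMS to interval velocity is commonly done with the Dix equation. Assuming flat layers, RMS velocities Vrms_n picked at increasing two-way times t_n, and a first interval starting at t = 0, the interval velocity of layer n satisfies Vint_n^2 = (Vrms_n^2 * t_n - Vrms_(n-1)^2 * t_(n-1)) / (t_n - t_(n-1)). The sketch below applies it to one picked velocity function; the abstract does not say which conversion DeltaStack3D uses, so this is an illustration, not Total's implementation.

```python
import numpy as np

def dix_interval_velocity(t, v_rms):
    """Interval velocities from RMS velocities picked at increasing two-way times (Dix equation).

    Assumes flat layers, t in seconds with t[0] > 0, and the first interval starting at t = 0.
    """
    t = np.asarray(t, dtype=float)
    v = np.asarray(v_rms, dtype=float)
    if t[0] <= 0 or np.any(np.diff(t) <= 0):
        raise ValueError("times must be positive and strictly increasing")
    t_prev = np.concatenate(([0.0], t[:-1]))   # start time of each interval
    v_prev = np.concatenate(([0.0], v[:-1]))   # RMS velocity at the start of each interval
    v_int_sq = (v**2 * t - v_prev**2 * t_prev) / (t - t_prev)
    if np.any(v_int_sq <= 0):
        raise ValueError("RMS velocity picks imply non-physical interval velocities")
    return np.sqrt(v_int_sq)

# Forward model: three layers, then recover their interval velocities (illustrative values)
v_int_true = np.array([1500.0, 2000.0, 2500.0])   # m/s
dt = np.array([0.2, 0.3, 0.5])                    # two-way time thickness of each layer, s
t = np.cumsum(dt)
v_rms = np.sqrt(np.cumsum(v_int_true**2 * dt) / t)
v_int = dix_interval_velocity(t, v_rms)
```

Because the equation differences successive picks, small picking errors are amplified in thin intervals, which is one reason why interval velocity cubes benefit from the kind of spatial quality assessment described above.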

MARINE AND PETROLEUM GEOLOGY 53 (2014) 133-153, ELSEVIER: THE APPLICATION OF HIGH-RESOLUTION 3D SEISMIC DATA TO MODEL THE DISTRIBUTION OF MECHANICAL AND HYDROGEOLOGICAL PROPERTIES OF A POTENTIAL HOST ROCK FOR THE DEEP STORAGE OF RADIOACTIVE WASTE IN FRANCE J.L. Mari (a), B. Yven (b,*). (a) IFP Énergies nouvelles, IFP School, 92852 Rueil Malmaison Cedex, France; (b) Andra, 1-7 parc de la Croix Blanche, 92298 Châtenay-Malabry Cedex, France

In the context of a deep geological repository for high-level radioactive waste, the French National Radioactive Waste Management Agency (Andra) has conducted an extensive characterization of the Callovo-Oxfordian argillaceous rock and surrounding formations in the eastern Paris Basin. As part of this project, an accurate 3D seismic-derived geological model is needed. The paper describes the procedure used to build the 3D seismic-constrained geological model in depth by combining time-to-depth conversion of seismic horizons, a consistent seismic velocity model and elastic impedance in time. It also shows how the 3D model is used for mechanical and hydrogeological studies. The 3D seismic field data example illustrates the potential of the proposed depth conversion procedure for estimating density and velocity distributions that are consistent with the depth conversion of seismic horizons by Bayesian kriging. The geological model shows good agreement with well log data from a reference well located closest to the 3D seismic survey area. Modeling of mechanical parameters such as shear modulus, Young's modulus and bulk modulus indicates low variability, confirming the homogeneity of the target formation (Callovo-Oxfordian claystone). 3D modeling of a permeability index (Ik-Seis) computed from seismic attributes (instantaneous frequency, envelope, elastic impedance) and validated at the reference well shows promising potential for supporting hydrogeological simulation and safety-related decision making.
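For an isotropic elastic medium, the mechanical parameters named above follow from density and P- and S-wave velocities: shear modulus mu = rho * Vs^2, bulk modulus K = rho * (Vp^2 - 4/3 * Vs^2) and Young's modulus E = rho * Vs^2 * (3 Vp^2 - 4 Vs^2) / (Vp^2 - Vs^2). The sketch below evaluates these relations on scalars or whole cubes; it assumes SI units and available Vp, Vs and density models, and is not necessarily the exact procedure used in the paper.

```python
import numpy as np

def elastic_moduli(vp, vs, rho):
    """Isotropic moduli in Pa from Vp, Vs (m/s) and density (kg/m3); scalars or cubes."""
    vp, vs, rho = (np.asarray(a, dtype=float) for a in (vp, vs, rho))
    # A positive bulk modulus requires Vp^2 > 4/3 Vs^2 (this also implies Vp > Vs)
    if np.any(vp**2 <= 4.0 / 3.0 * vs**2):
        raise ValueError("velocities imply a non-positive bulk modulus")
    shear = rho * vs**2                                             # mu
    bulk = rho * (vp**2 - 4.0 / 3.0 * vs**2)                        # K
    young = shear * (3.0 * vp**2 - 4.0 * vs**2) / (vp**2 - vs**2)   # E
    return shear, bulk, young

mu, k, e = elastic_moduli(3000.0, 1500.0, 2400.0)
print(f"mu = {mu / 1e9:.1f} GPa, K = {k / 1e9:.1f} GPa, E = {e / 1e9:.1f} GPa")
# prints: mu = 5.4 GPa, K = 14.4 GPa, E = 14.4 GPa
```

Applied cell by cell to velocity and density cubes, the same function yields modulus cubes whose spatial spread gives a direct measure of the variability discussed in the abstract.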

EAGE 2014: PLEA FOR CONSISTENT UNCERTAINTY MANAGEMENT IN GEOPHYSICAL WORKFLOWS TO BETTER SUPPORT E&P DECISION-MAKING Luc Sandjivy (Seisquare), A. Shtuka (Seisquare), François Merer (Seisquare)

Despite tremendous progress in acquisition and processing techniques, geophysical data sets continue to carry uncertainty that impacts the performance of processing, interpretation and subsurface property modeling workflows. This paper is a plea for consistent uncertainty quantification and propagation throughout successive geophysical workflows (“Uncertainty Management”), with a view to maximizing the performance of geophysical workflows and the reliability of static/dynamic reservoir models. The authors first run through the mathematical framework applied to Uncertainty Management. They then give an example of how to implement Uncertainty Management in a geophysical workflow, focusing on Gross Rock Volume computations (and the associated P10, P50 and P90 structural depth cases) in support of prospect ranking and discovery appraisal.
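To illustrate how P10, P50 and P90 structural depth cases can be derived from stochastic depth maps, the sketch below computes a gross rock volume per realization as the rock column between the top-reservoir surface and a flat contact, then picks the realizations closest to each percentile. It assumes the usual E&P exceedance convention (P90 is the low case, exceeded with 90% probability), a constant contact depth, a reservoir thick enough that its base never cuts the column, and hypothetical function names; the synthetic realizations are a crude stand-in for geostatistical depth simulations.

```python
import numpy as np

def gross_rock_volume(top_depth, contact_depth, cell_area):
    """GRV of the rock column between a top-reservoir depth map and a flat contact.

    Depths are positive downwards; cells whose top lies below the contact contribute nothing.
    """
    column = np.clip(contact_depth - np.asarray(top_depth, dtype=float), 0.0, None)
    return float(column.sum() * cell_area)

def grv_cases(depth_realizations, contact_depth, cell_area):
    """GRV per realization, P90/P50/P10 GRV values and the realization closest to each case."""
    grv = np.array([gross_rock_volume(r, contact_depth, cell_area) for r in depth_realizations])
    # Exceedance convention: P90 (low case) is exceeded by 90% of outcomes -> 10th percentile
    p90, p50, p10 = np.percentile(grv, [10, 50, 90])
    cases = {"P90": p90, "P50": p50, "P10": p10}
    # Structural depth case = index of the realization whose GRV is closest to each percentile
    closest = {name: int(np.argmin(np.abs(grv - value))) for name, value in cases.items()}
    return grv, cases, closest

# Synthetic example: a dome-shaped top surface shifted by a random depth error per realization
rng = np.random.default_rng(2)
yy, xx = np.mgrid[0:50, 0:50]
dome = 2000.0 + 0.05 * ((xx - 25.0) ** 2 + (yy - 25.0) ** 2)   # depth in m
realizations = [dome + rng.normal(0.0, 10.0) for _ in range(200)]
grv, cases, closest = grv_cases(realizations, contact_depth=2030.0, cell_area=50.0 * 50.0)
```

Selecting actual realizations, rather than percentile maps computed cell by cell, keeps each structural case a geologically consistent surface whose volume matches its probability level.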

Workflow: Reservoir 4D

EAGE 2004: IMPROVING 4D SEISMIC REPEATABILITY USING 3D FACTORIAL KRIGING F. JUGLA* (1), M. RAPIN (1), S. LEGERON (2), C. MAGNERON (2), L. LIVINGSTONE (3). (1) TOTAL, 2 Place de la Coupole, 92078 Paris La Défense (France); (2) SEISQUARE, 16 Rue du château, 77300 Fontainebleau (France); (3) TOTAL E&P UK PLC, Crawpeel road, Altens, AB12 3FG Aberdeen (UK)

Geostatistical filtering (factorial kriging) was applied to the 1981-1996 time-lapse seismic data of the TOTAL UK Alwyn field. The goal was to suppress noise and acquisition artifacts in both data sets in order to improve 4D repeatability. After filtering, the measured coherency (both vertical and spatial) between the two equalized cubes was greatly improved. Consequently, the 4D events seen in the production interval appeared better focused, which allowed a time-lapse interpretation to be performed with a much higher level of confidence.
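The abstract does not state which coherency measure was used. As an assumption, the sketch below shows two common trace-by-trace repeatability measures: NRMS, defined as 200 x RMS(monitor - base) / (RMS(base) + RMS(monitor)) in percent (0 for identical traces, 200 for polarity-reversed ones), and the zero-lag correlation coefficient. Computing them before and after filtering is one way to quantify a repeatability improvement.

```python
import numpy as np

def rms(a):
    """Root-mean-square amplitude of a trace."""
    return float(np.sqrt(np.mean(np.square(a))))

def nrms_percent(base, monitor):
    """NRMS repeatability in percent: 0 for identical traces, 200 for polarity-reversed ones."""
    base = np.asarray(base, dtype=float)
    monitor = np.asarray(monitor, dtype=float)
    denom = rms(base) + rms(monitor)
    if denom == 0.0:
        raise ValueError("both traces are zero")
    return 200.0 * rms(monitor - base) / denom

def zero_lag_correlation(base, monitor):
    """Pearson correlation between two traces (1 for identical waveforms up to a scale factor)."""
    return float(np.corrcoef(base, monitor)[0, 1])

# Synthetic check: one trace with independent noise added to each vintage (illustrative only)
rng = np.random.default_rng(3)
t = np.linspace(0.0, 1.0, 500)
trace = np.sin(2 * np.pi * 25 * t) * np.exp(-3 * t)
base = trace + rng.normal(scale=0.2, size=t.size)
monitor = trace + rng.normal(scale=0.2, size=t.size)
print(nrms_percent(base, monitor), zero_lag_correlation(base, monitor))
```

Independent noise in each vintage raises NRMS and lowers the correlation even where nothing changed in the reservoir; removing that noise is what lets genuine 4D differences stand out.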

SBGF 2005: ATUM FIELD: 4D QUALIFICATION OF 2 SEISMIC VINTAGES USING GEOSTATISTICS L. Sandjivy, C. Magneron, C. Formento (Seisquare, France), Odilon Keller (Petrobras UNRN)

4D spatial diagnostics and geostatistical filtering (factorial kriging) were applied to the 1987 and 2003 seismic vintages acquired over the PETROBRAS RN Atum field. The goal was to evaluate the suitability of these seismic data for highlighting 4D production effects. A spatial analysis (controlled by the frequency power spectrum) and factorial kriging were used to quantify and suppress noise and acquisition artefacts in both data sets in order to improve 4D repeatability. After spatial filtering, the measured coherency (both vertical and spatial) between the two cubes was greatly improved, but major amplitude differences remained because of the different acquisition methods: streamer in 1987 and ocean-bottom cable in 2003. Consequently, the data were not qualified as they stood for a further 4D study. Instead, dedicated spatial filters controlled by the geophysical frequency spectrum were derived to enable comparison and further amplitude equalization of the data sets. The filtered results are currently being used to increase the confidence level of the structural interpretation of both seismic data sets.